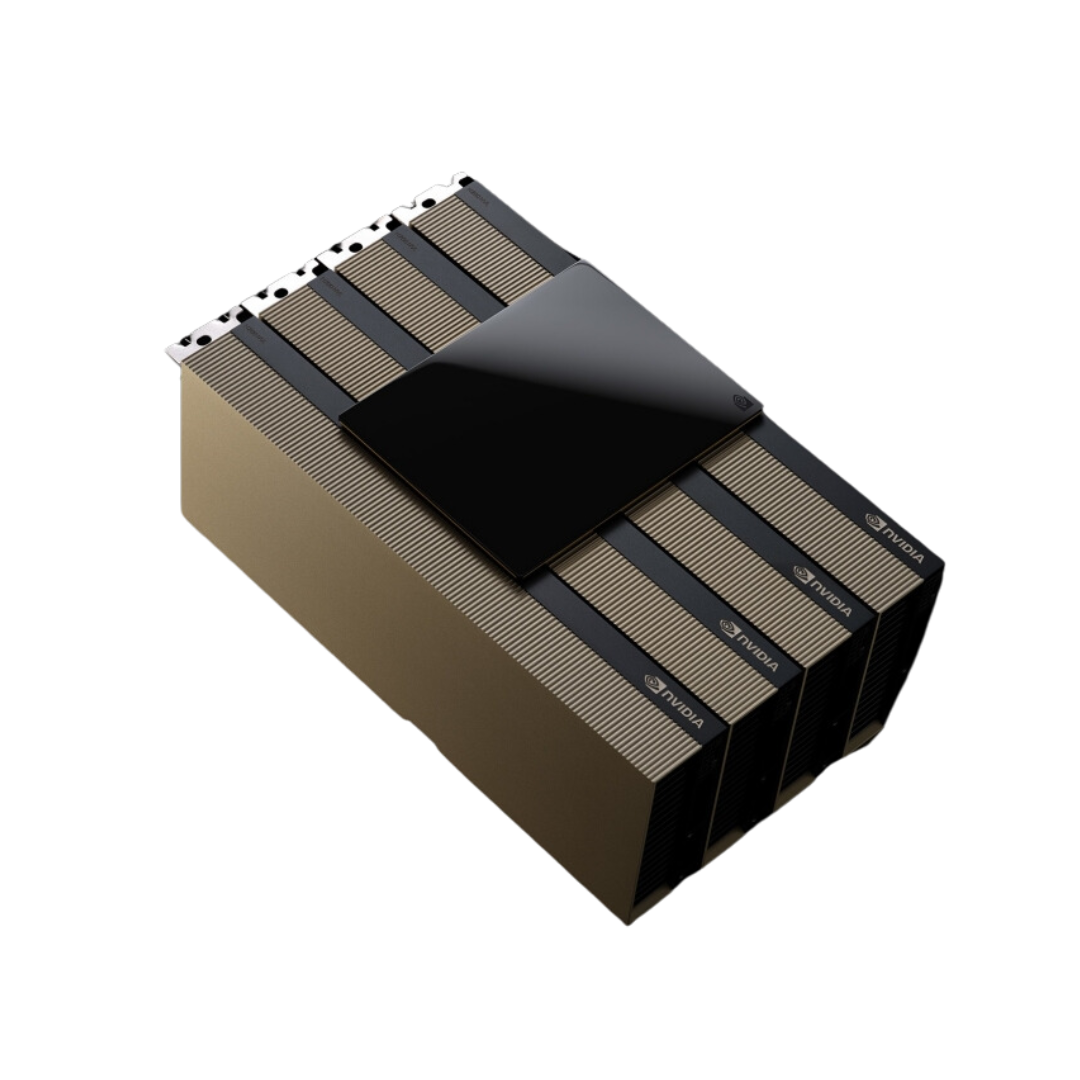

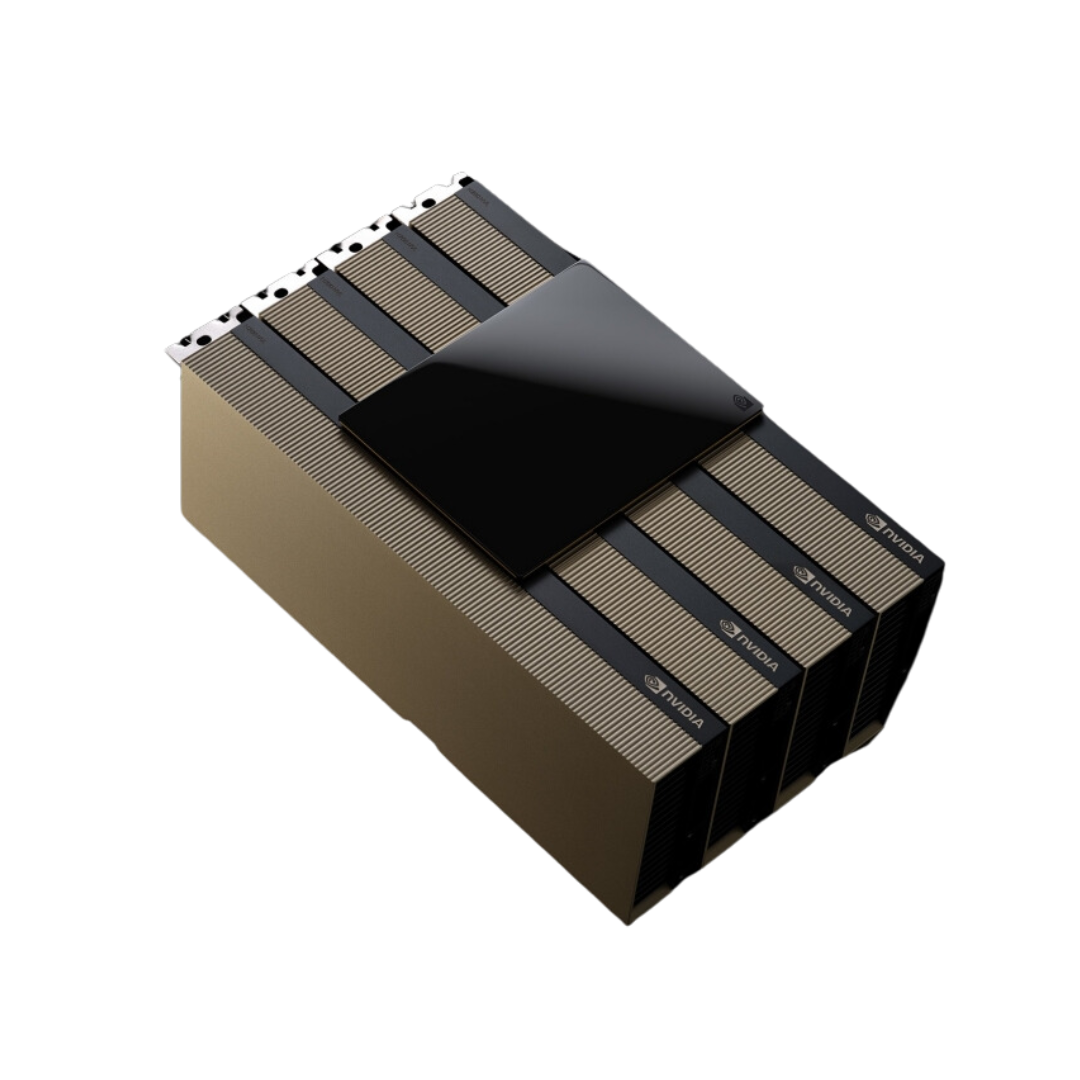

NVIDIA H200: Powerful AI Performance

The NVIDIA H200 is built for large-scale AI workloads. It is based on the NVIDIA Hopper architecture, whose features speed up both training and inference for larger models. Pricing starts at $3.79 per hour.

| CUDA Cores | 24,576 |

|---|---|

| Tensor Cores | 456 (4th Gen) |

| Architecture | Hopper |

| FP16 Precision Support | Supported |

| FP8 Precision Support | Supported |

| Memory (VRAM) | 143 GB HBM3e |

| Memory Bandwidth | 4.8 TB/s |

| Max Power Consumption | 700 W |

| API & Framework Support | CUDA, OpenCL, TensorRT, PyTorch, TensorFlow |

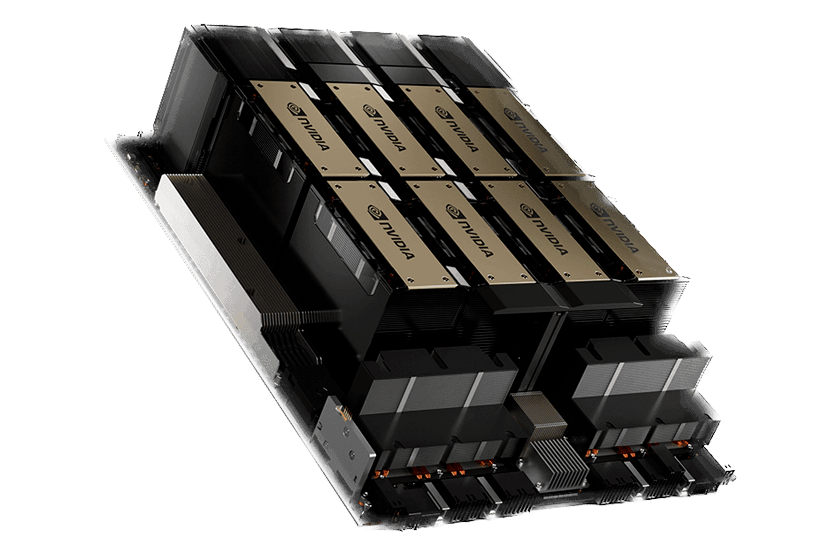

| Virtualization Support | NVIDIA GRID, SR-IOV, Multi-instance GPU (MIG) |
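To put the memory and bandwidth figures in context, the following is a rough sizing sketch, not a benchmark. It takes the 143 GB VRAM and 4.8 TB/s bandwidth values from the table above and assumes 2 bytes per parameter for FP16 and 1 byte for FP8. The 70-billion-parameter model and all function names are hypothetical, chosen only for illustration. The estimate covers model weights alone and ignores the KV cache, activations, and framework overhead, so real headroom will be smaller.

```python
# Back-of-the-envelope sizing using figures from the spec table.
# Assumptions (not from the source): weights-only footprint, and a
# bandwidth-bound decode step that reads every weight once per token.

VRAM_GB = 143           # Memory (VRAM) from the table
BANDWIDTH_TBPS = 4.8    # Memory bandwidth from the table

BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}


def weight_footprint_gb(num_params: float, precision: str) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_in_vram(num_params: float, precision: str, vram_gb: float = VRAM_GB) -> bool:
    """True if the weights alone fit in the given amount of VRAM."""
    return weight_footprint_gb(num_params, precision) <= vram_gb


def max_decode_tokens_per_s(
    num_params: float, precision: str, bandwidth_tbps: float = BANDWIDTH_TBPS
) -> float:
    """Rough upper bound on single-stream decode speed when memory-bound."""
    bytes_per_token = num_params * BYTES_PER_PARAM[precision]
    return bandwidth_tbps * 1e12 / bytes_per_token


if __name__ == "__main__":
    params = 70e9  # hypothetical 70B-parameter model
    for precision in ("fp16", "fp8"):
        size = weight_footprint_gb(params, precision)
        bound = max_decode_tokens_per_s(params, precision)
        print(f"{precision}: {size:.0f} GB of weights, "
              f"fits={fits_in_vram(params, precision)}, "
              f"<= {bound:.1f} tokens/s per stream")
```

Under these assumptions, the hypothetical model's FP16 weights (140 GB) only just fit on one GPU, while FP8 halves the footprint to 70 GB and doubles the bandwidth-bound ceiling, which is the practical value of FP8 support for larger models.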

Train and deploy large language models and other neural networks faster with the H200’s compute capabilities.

Analyze complex datasets and run advanced simulations with exceptional memory bandwidth and compute power.

Run compute-intensive workloads in fields such as genomics, climate modeling, and fluid dynamics with high precision and efficiency.

Transform your workflows with state-of-the-art performance, efficiency, and scalability tailored for the most demanding workloads.
