October 17, 2025

Building a generative AI product means balancing innovation with raw computational limits. Training multimodal diffusion models, rendering 4K videos, or fine-tuning LLMs can push your infrastructure budget to the breaking point, especially if you rely on hyperscalers.

What many founders discover too late is that scaling AI models in the cloud isn’t just about GPU performance. It’s about predictability: knowing exactly how much you’ll pay for every hour of compute, every gigabyte of data transfer, and every storage expansion. That’s where smart infrastructure decisions can make or break your roadmap.

Generative AI workloads are GPU-intensive by nature. Each new model or iteration adds more parameters, longer training cycles, and heavier data requirements. For startups, this usually means racking up tens of thousands of GPU hours per month. At $2–$6 per hour (for mid-range GPUs on AWS or GCP), compute alone can consume over 60% of a startup’s monthly burn rate. And the hidden costs?

In short: the faster your AI model learns, the faster your bill explodes.
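To put rough numbers on that, here is a minimal sketch of the arithmetic behind the $2–$6 per hour range mentioned above. The figure of 20,000 GPU hours is an assumption chosen to stand in for "tens of thousands", and `monthly_compute_cost` is a hypothetical helper, not part of any provider's tooling.

```python
def monthly_compute_cost(
    gpu_hours: float,
    rate_low: float = 2.0,   # low end of the hourly range quoted in the text (USD)
    rate_high: float = 6.0,  # high end of the hourly range quoted in the text (USD)
) -> tuple[float, float]:
    """Return the (low, high) monthly compute bill in USD for a given number of GPU hours."""
    if gpu_hours < 0:
        raise ValueError("gpu_hours must be non-negative")
    return gpu_hours * rate_low, gpu_hours * rate_high


# Assumed workload: 20,000 GPU hours in one month (illustrative only).
low, high = monthly_compute_cost(20_000)
print(f"${low:,.0f} to ${high:,.0f} per month")  # $40,000 to $120,000 per month
```

Even before storage or data transfer enters the picture, the spread between the low and high end of that range is large enough to change a hiring plan.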

Large cloud providers are powerful but built for scale: their scale, not yours. Each service layer adds abstraction, cost, and management overhead. For small dev teams, that means spending time in dashboards, balancing spot instances, or monitoring unpredictable billing. And even when you get GPUs, they’re often virtualized and throttled, which means you’re not getting full performance for the price you pay. That’s the opposite of what agile AI startups need.

Virtual machines are fine for general workloads, but generative AI demands dedicated power. Bare-metal GPUs give you 100% of the hardware: no noisy neighbors, no hypervisor throttling, and no unpredictable latency. It’s the difference between driving your own supercar and renting one with a speed limiter. At 1Legion, every RTX 5090 node is bare-metal and unmetered, delivering full throughput for training, inference, and creative workloads. Each node supports up to 8 GPUs, and together the nodes form the world’s largest RTX 5090 cluster, purpose-built for AI and media.

Scaling smart isn’t just about speed; it’s about control. Many AI founders are shocked when they realize that egress, ingress, and storage costs weren’t included in the original quote. 1Legion’s model eliminates that uncertainty.

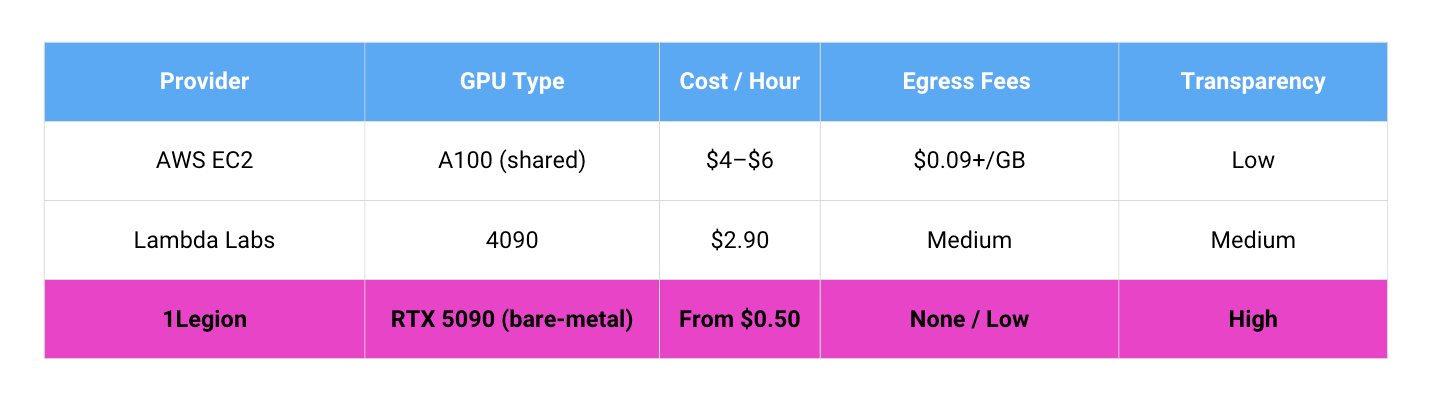

Let’s compare:

When you know what you’ll pay, you can plan your compute like you plan your roadmap, deliberately.

1Legion’s infrastructure is built around the way modern AI teams actually work.

Instead of learning a new cloud ecosystem, you get dedicated GPUs and direct communication with engineers who understand your use case.

Our infrastructure is optimized for real-world creative workloads:

Each node is tuned for maximum output under constant load, ideal for startups iterating quickly on generative tools or products.

Traditional render farms or on-prem clusters sit idle when projects slow down. With 1Legion, you can scale up for model training and scale down for inference or deployment. That flexibility means no wasted resources, no surprise invoices, and no downtime between iterations.

To validate real-world efficiency, our engineering team ran a series of internal benchmark simulations comparing AWS A100 instances and 1Legion’s bare-metal RTX 5090 nodes. The workloads were based on typical diffusion-model training and generative video rendering pipelines.

Setup parameters:

Results summary:

These controlled tests confirmed that bare-metal RTX 5090 nodes can deliver comparable, or better, training stability while reducing total cost of ownership by nearly 50%. All simulations were performed under identical dataset size, batch configuration, and runtime parameters to ensure a fair comparison.

In other words: when you remove virtualization overhead and hidden fees, performance scales linearly and your budget stops bleeding.

When selecting where to run your next training cycle, ask yourself:

- Am I getting full GPU performance or a shared fraction?

- Do I know my total cost, including storage and egress?

- Can I deploy and test quickly without long contracts?

- Do I have access to real human support if something fails?

If the answer to any of those is no, or you’re only getting a shared fraction of a GPU, you’re probably paying more than you should.
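The total-cost question in the checklist above can be made concrete with a small all-in estimate. This is only a sketch: `MonthlyUsage`, `Pricing`, and `total_monthly_cost` are hypothetical names, and every rate and usage figure below is a placeholder to be replaced with numbers from your own quote, not a price from any provider.

```python
from dataclasses import dataclass


@dataclass
class MonthlyUsage:
    gpu_hours: float    # GPU hours consumed in the month
    egress_gb: float    # data transferred out, in GB
    storage_gb: float   # average storage held during the month, in GB


@dataclass
class Pricing:
    gpu_hour_usd: float          # price per GPU hour
    egress_gb_usd: float         # price per GB of egress (0 if included)
    storage_gb_month_usd: float  # price per GB-month of storage (0 if included)


def total_monthly_cost(usage: MonthlyUsage, pricing: Pricing) -> dict[str, float]:
    """Break a monthly bill into compute, egress, and storage line items plus a total."""
    compute = usage.gpu_hours * pricing.gpu_hour_usd
    egress = usage.egress_gb * pricing.egress_gb_usd
    storage = usage.storage_gb * pricing.storage_gb_month_usd
    return {
        "compute": compute,
        "egress": egress,
        "storage": storage,
        "total": compute + egress + storage,
    }


# Placeholder figures for illustration only; substitute the values from your own quote.
usage = MonthlyUsage(gpu_hours=10_000, egress_gb=50_000, storage_gb=20_000)
quote = Pricing(gpu_hour_usd=3.0, egress_gb_usd=0.10, storage_gb_month_usd=0.02)

bill = total_monthly_cost(usage, quote)
non_compute_share = (bill["egress"] + bill["storage"]) / bill["total"]
print(f"Total: ${bill['total']:,.0f}, non-compute share: {non_compute_share:.1%}")
```

Running the same usage through two quotes, one with metered egress and storage and one with those items set to zero, shows how much of the difference comes from line items that a headline GPU price leaves out.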

At 1Legion, we built our GPU infrastructure for creators and engineers, not accountants. You get the power of the world’s largest RTX 5090 cluster with clear pricing, real-time onboarding, and performance that keeps up with your imagination.