August 1, 2025

As artificial intelligence, machine learning, and data-driven innovation continue to evolve, the infrastructure behind it all becomes increasingly important. One key decision that developers, researchers, and businesses face today is whether to invest in on-premise GPUs or leverage cloud GPU services.

At first glance, it might seem obvious—just buy the best hardware you can afford and run your workloads in-house. But as use cases grow more complex, and demands for flexibility and scalability increase, many are turning to cloud solutions. So, which path makes more sense for your needs?

Let’s dive into both options and explore the real-world pros, cons, and best use cases—so you can make an informed decision.

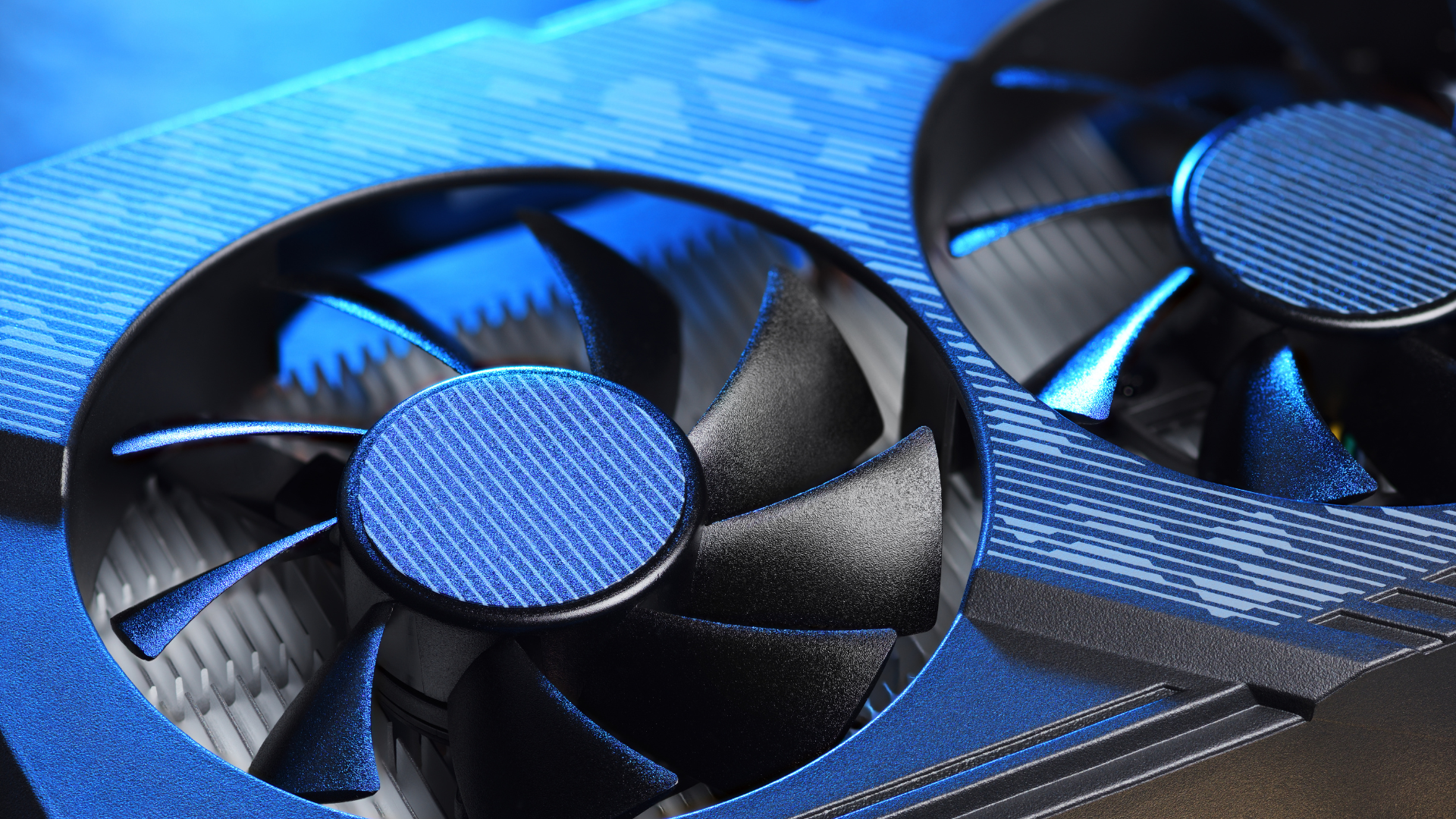

Owning your own GPU infrastructure gives you complete control—over performance, security, and usage. If you’re running deep learning models every day or need to guarantee consistent speed and access, on-premise GPUs offer peace of mind.

Here’s where on-premise GPUs shine:

However, there are caveats. High upfront costs, ongoing maintenance, energy consumption, and hardware depreciation can make on-premise solutions less appealing for smaller teams or fast-moving startups.

Cloud GPUs are gaining traction for a reason: they let you scale up fast, access cutting-edge hardware, and avoid the headache of maintaining physical infrastructure.

Platforms like 1Legion offer on-demand access to high-performance GPUs such as the RTX 5090, A100, and H100—ideal for AI model training, inference, and rendering. No need to wait weeks for new hardware to arrive. No need to hire a dedicated IT team.

What makes cloud GPUs compelling:

That said, cloud costs can add up over time, especially if you’re using GPU resources daily or running long training sessions. Storage and data-transfer (egress) charges can also surprise you if not managed properly.
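One way to make "cost stability versus cost that adds up" concrete is a rough break-even estimate. It asks how many months of steady cloud usage would pay for the equivalent on-premise hardware. The sketch below is a simplified model under stated assumptions. All prices and the parameter names (`hardware_cost`, `monthly_onprem_opex`, `cloud_rate_per_gpu_hour`, `gpu_hours_per_month`) are hypothetical placeholders, not 1Legion pricing. It ignores depreciation, resale value, financing, egress and staff time.

```python
def break_even_months(hardware_cost, monthly_onprem_opex,
                      cloud_rate_per_gpu_hour, gpu_hours_per_month):
    """Rough months until buying hardware beats renting cloud GPUs.

    All inputs are hypothetical, user-supplied estimates:
      hardware_cost           -- upfront purchase price of the on-prem GPUs
      monthly_onprem_opex     -- power, cooling, hosting, maintenance per month
      cloud_rate_per_gpu_hour -- assumed cloud price per GPU-hour
      gpu_hours_per_month     -- expected GPU-hours of actual use per month
    Simplified model: no depreciation, resale, financing or egress fees.
    """
    monthly_cloud_cost = cloud_rate_per_gpu_hour * gpu_hours_per_month
    monthly_savings = monthly_cloud_cost - monthly_onprem_opex
    if monthly_savings <= 0:
        # At this utilization, owning costs as much as or more than renting
        # every month, so the upfront spend is never recovered.
        return None
    return hardware_cost / monthly_savings


if __name__ == "__main__":
    # Hypothetical numbers for illustration only; substitute your own quotes.
    for hours in (100, 200, 500, 720):
        months = break_even_months(30_000, 400, 2.0, hours)
        label = "never" if months is None else f"{months:.1f} months"
        print(f"{hours:>4} GPU-hours/month -> break-even: {label}")
```

With these placeholder numbers, 500 GPU-hours per month breaks even after 50 months. At 200 hours or fewer, owning never pays off. The model shows why heavy daily use tends to favor owned hardware and occasional use tends to favor the cloud.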

There’s no one-size-fits-all answer, but here are a few questions to help guide your decision:

Increasingly, organizations are choosing a hybrid model—running part of their workloads on local GPUs and spinning up cloud resources when needed.

For example, you might:

This hybrid strategy offers control, performance, and flexibility all at once. And with providers like 1Legion, you can manage both sides of your infrastructure easily and transparently.
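The hybrid idea of running on local GPUs first and spinning up cloud capacity when needed can be sketched as a simple "burst to cloud" placement policy. This is a hypothetical illustration, not a 1Legion API. The `Job` type, the `sensitive` flag and the greedy first-fit rule are all assumptions. Sensitive jobs never leave local hardware, which reflects the control and security argument for on-premise GPUs. If they don't fit, they wait in a queue instead.

```python
from dataclasses import dataclass


@dataclass
class Job:
    # Hypothetical job description for illustration.
    name: str
    gpus: int                # number of GPUs the job requests
    sensitive: bool = False  # assumed flag: data must stay on local hardware


def place_jobs(jobs, local_gpus):
    """Greedy hybrid placement: fill local GPUs first, burst the rest to cloud.

    Jobs are considered in the order given. A job that fits in the remaining
    local capacity runs locally. Otherwise, non-sensitive jobs burst to the
    cloud, while sensitive jobs are queued until local GPUs free up.
    """
    free = local_gpus
    placement = {}
    for job in jobs:
        if job.gpus <= free:
            placement[job.name] = "local"
            free -= job.gpus
        elif job.sensitive:
            placement[job.name] = "queued"
        else:
            placement[job.name] = "cloud"
    return placement


if __name__ == "__main__":
    jobs = [
        Job("nightly-inference", 2),
        Job("finetune-private", 4, sensitive=True),
        Job("hparam-sweep", 8),
        Job("audit-eval", 4, sensitive=True),
    ]
    print(place_jobs(jobs, local_gpus=8))
```

With 8 local GPUs, the first two jobs run locally. The large sweep bursts to the cloud, and the second sensitive job waits for local capacity. A real scheduler would also weigh cost, deadlines and data-transfer charges.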

Whether you’re building the next breakthrough AI model, launching a generative art platform, or training LLMs at scale, choosing the right GPU strategy is essential.

On-premise hardware gives you control, performance, and cost stability—at the price of high upfront investment and maintenance. Cloud GPUs offer agility, simplicity, and scalability, but they can become expensive over time if not managed strategically.

The answer? It depends on your workload, budget, team size, and how fast you want to move.

And remember: you don’t have to choose just one.

At 1Legion, we provide both short-term cloud GPU rentals and long-term infrastructure solutions tailored to your needs. Whether you’re just starting or scaling to enterprise levels, we’re here to help you power your next AI project with high-performance compute, on your terms.

Let’s talk about what works best for you.