December 3, 2025

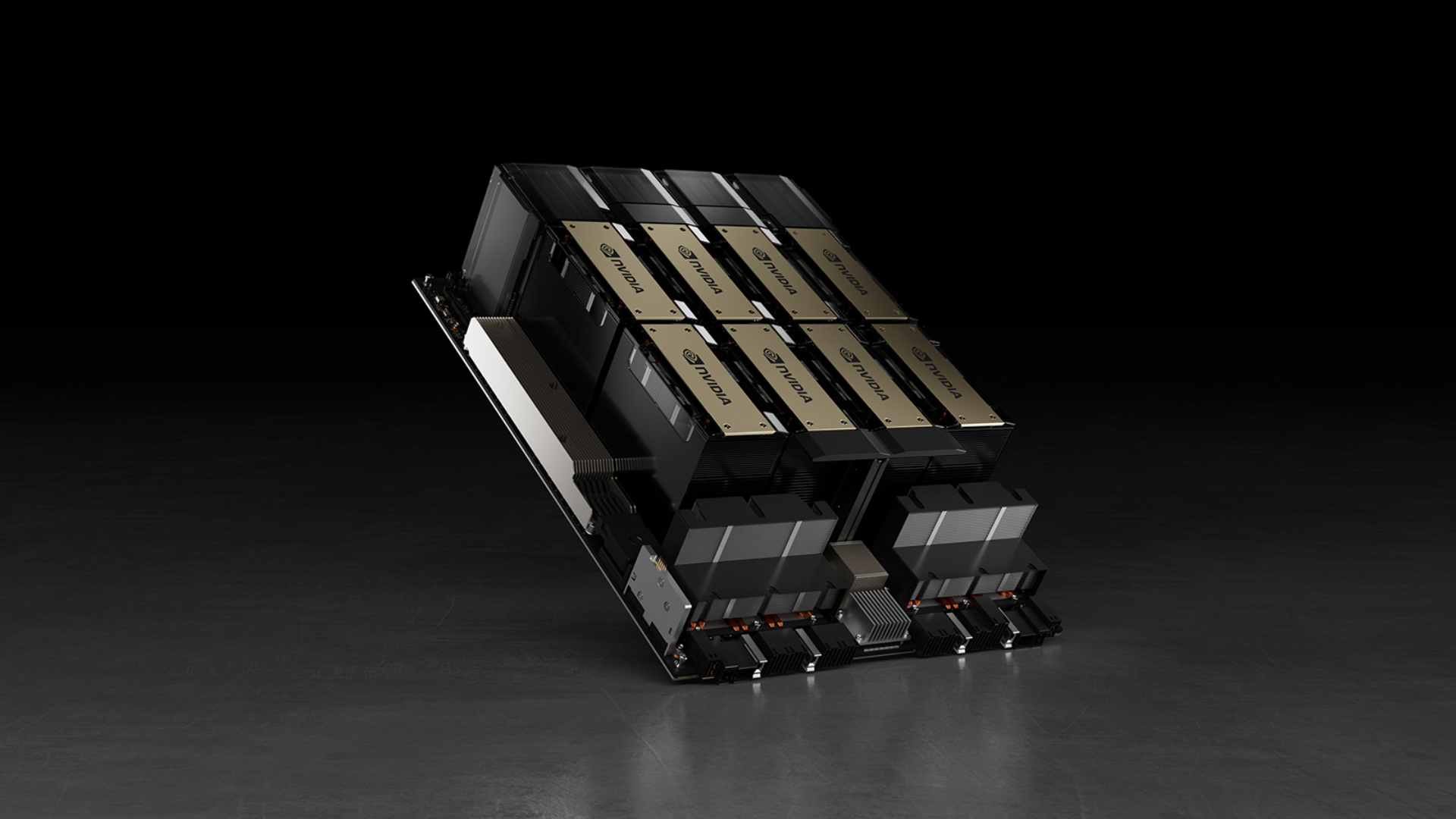

The NVIDIA H100 is one of the most capable data-center GPUs in wide deployment today. It powers frontier LLMs, large-scale multimodal systems, and complex distributed training clusters, but the decision to adopt an H100 isn’t simply about using the “most powerful” chip. It’s about choosing the architecture that matches the stage your workload has reached: technically, strategically, and operationally.

For most teams, the shift toward H100 is gradual. It begins with experimentation on consumer GPUs like the RTX 5090, moves into more complex training loops, and eventually reaches a point where the model’s scale or architecture requires hardware that goes beyond raw CUDA throughput.

This guide breaks down why and when the H100 becomes the right choice, grounding the explanation in real workloads from generative AI, video diffusion, VFX rendering, multimodal modeling, and large-scale media pipelines.

The goal is simple: help you understand the technical moments in which Hopper architecture becomes not just helpful, but necessary.

Teams don’t choose the H100 because it’s “high-end.” They choose it because certain workloads stop being efficient, stable, or feasible on consumer GPU architecture. Below are the core engineering reasons why Hopper becomes the correct tool.

What truly differentiates the H100 is its ability to mix FP8 and FP16 precision dynamically, allowing large transformers to train with materially higher throughput while maintaining stability. This matters once your model is large enough that activation memory, attention depth, and tensor data movement, rather than raw compute, become the bottleneck.

Teams building models above ~70B parameters, or multimodal transformers with heavy attention patterns, see the largest gains here. FP8 lets you increase batch size, push more parallelism, and reach convergence sooner in wall-clock time without rewriting your architecture.

If your model is fundamentally transformer-first and increasingly deep, FP8 isn’t a small advantage; it becomes foundational.
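To make the batch-size argument concrete, here is a minimal back-of-the-envelope sketch. It assumes activation memory scales linearly with bytes per element, and it uses a hypothetical `tensors_per_layer` fudge factor to stand in for everything a layer keeps for the backward pass. Real FP8 recipes keep some tensors in 16-bit or 32-bit, so treat the FP8 figure as an upper bound on the saving rather than a measurement.

```python
# Bytes needed to store one element at each precision.
BYTES_PER_ELEMENT = {"fp32": 4, "fp16": 2, "fp8": 1}


def activation_bytes_per_sample(layers, seq_len, hidden, dtype, tensors_per_layer=16):
    """Rough activation footprint of one training sample.

    tensors_per_layer is a hypothetical fudge factor (not a measured value)
    for how many seq_len x hidden tensors each layer saves for backward.
    """
    elements = layers * seq_len * hidden * tensors_per_layer
    return elements * BYTES_PER_ELEMENT[dtype]


def max_batch_size(budget_bytes, layers, seq_len, hidden, dtype):
    """Largest batch whose activations fit in the given memory budget."""
    per_sample = activation_bytes_per_sample(layers, seq_len, hidden, dtype)
    return budget_bytes // per_sample


if __name__ == "__main__":
    # Illustrative model shape and activation budget (assumptions, not benchmarks).
    budget = 40 * 1024**3
    for dtype in ("fp16", "fp8"):
        batch = max_batch_size(budget, layers=32, seq_len=4096, hidden=4096, dtype=dtype)
        print(f"{dtype}: max batch size ~ {batch}")
```

The point of the sketch is the ratio, not the absolute numbers: halving the bytes per element roughly doubles the batch that fits in the same budget.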

As soon as your model stops fitting comfortably on a single GPU, you move into the world of distribution, and this is where consumer GPUs hit structural limits. Without NVLink, cross-GPU synchronization becomes slow and noisy. Without NVSwitch, scaling beyond 4–8 GPUs becomes inefficient or unstable. H100 solves this with:

This is essential for workloads involving tensor parallelism, long-context transformers, sharded attention layers, and MoE routing. Without NVLink or NVSwitch, these architectures simply do not scale well.
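A rough way to see why the interconnect matters is to estimate how long one gradient synchronization takes. The sketch below uses the standard bandwidth-only model of a ring all-reduce, in which each GPU sends about 2(N-1)/N times the payload. The bandwidth constants are nominal per-direction peak figures (roughly 64 GB/s for a PCIe 5.0 x16 link, roughly 450 GB/s for NVLink on an H100 SXM) and are assumptions for illustration; sustained throughput in a real cluster will be lower, and latency is ignored entirely.

```python
# Nominal per-direction peak bandwidths in bytes/second (illustrative assumptions).
PCIE5_X16_BYTES_PER_SEC = 64e9
H100_NVLINK_BYTES_PER_SEC = 450e9


def ring_allreduce_seconds(payload_bytes, num_gpus, link_bytes_per_sec):
    """Bandwidth-only estimate of one ring all-reduce.

    Each GPU sends (and receives) 2 * (N - 1) / N of the payload.
    Latency, protocol overhead, and compute overlap are ignored.
    """
    if num_gpus < 2:
        return 0.0  # nothing to synchronize on a single GPU
    traffic = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return traffic / link_bytes_per_sec


if __name__ == "__main__":
    # Example: FP16 gradients of a 7B-parameter model, synchronized across 8 GPUs.
    grad_bytes = 7e9 * 2
    for name, bw in (("PCIe 5.0 x16", PCIE5_X16_BYTES_PER_SEC),
                     ("NVLink (H100 SXM)", H100_NVLINK_BYTES_PER_SEC)):
        t = ring_allreduce_seconds(grad_bytes, num_gpus=8, link_bytes_per_sec=bw)
        print(f"{name}: ~{t * 1000:.0f} ms per all-reduce")
```

Because this cost is paid on every optimizer step, and tensor parallelism adds further collectives inside each layer, even a modest per-step difference compounds across a full training run.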

Some models grow beyond the point where CUDA performance alone is enough. Video-language transformers, long-context models, multi-branch diffusion pipelines, and high-dimensional multimodal encoders push memory and communication harder than consumer GPUs are built for. H100 supports:

If you’re building anything resembling a frontier-level generative model, especially in multimodal or video domains, the H100 is less about speed and more about feasibility. It enables architectures that can’t run efficiently elsewhere.

Teams often assume that “H100 = expensive,” but in many scenarios the opposite is true. When FP8 allows larger batches, NVLink reduces synchronization overhead, and Hopper tensor cores shorten time to convergence, the total cost per training objective can drop.

If your metric is:

The key is scale: once a model becomes large enough that efficiency compounds, Hopper becomes the rational economic choice.
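The cost argument comes down to simple arithmetic: what matters is price per GPU-hour multiplied by the hours needed to reach the training target, not the hourly price alone. The sketch below illustrates that comparison. Every price and duration in it is a hypothetical placeholder chosen for illustration, not a quote or a benchmark.

```python
def cost_per_run(price_per_gpu_hour, num_gpus, hours_to_target):
    """Total cost of reaching one training objective."""
    return price_per_gpu_hour * num_gpus * hours_to_target


def break_even_speedup(cheap_price, expensive_price):
    """Speedup the pricier GPU needs to match the cheaper one on cost per run."""
    return expensive_price / cheap_price


if __name__ == "__main__":
    # Hypothetical placeholder numbers, for illustration only.
    consumer = cost_per_run(price_per_gpu_hour=0.50, num_gpus=8, hours_to_target=100)
    hopper = cost_per_run(price_per_gpu_hour=2.50, num_gpus=8, hours_to_target=15)
    print(f"consumer cluster: ${consumer:.2f} per run")
    print(f"H100 cluster:     ${hopper:.2f} per run")
    print(f"break-even speedup: {break_even_speedup(0.50, 2.50):.1f}x")
```

With these placeholder inputs the pricier GPU wins only because the assumed speedup exceeds the price ratio. Plug in your own measured hours-to-target before drawing conclusions.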

The transition to H100 typically doesn’t come from ambition; it comes from practical signals inside your training pipeline. Most teams recognize these moments naturally as their workloads mature.

One of the earliest signals is when your model technically “fits” on a 5090, but not efficiently. You reduce batch size, enable checkpointing, or restructure layers, but each workaround slows development or destabilizes training. This is the moment where the H100’s HBM3 bandwidth and multi-GPU architecture make training not only possible, but predictable. When the model fits on paper but not in practice, you’ve crossed into H100 territory.
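A quick estimate shows what “fits on paper” looks like. The sketch below uses the common rule of thumb of about 16 bytes per parameter for mixed-precision training with Adam (FP16 weights and gradients, plus FP32 master weights and two FP32 optimizer moments). It assumes 32 GB of memory on an RTX 5090 and 80 GB on an H100, and it deliberately ignores activations, framework overhead, and fragmentation, which is exactly the headroom that runs out in practice.

```python
# Rule-of-thumb bytes per parameter for mixed-precision Adam training:
# fp16 weights (2) + fp16 grads (2) + fp32 master weights (4) + two fp32 moments (4 + 4).
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4

# Assumed memory capacities in GB.
RTX_5090_GB = 32
H100_GB = 80


def model_state_gb(num_params):
    """Memory for weights, gradients, and optimizer state (activations excluded)."""
    return num_params * BYTES_PER_PARAM / 1e9


def headroom_gb(num_params, gpu_memory_gb):
    """Memory left over for activations and overhead; negative means it does not fit."""
    return gpu_memory_gb - model_state_gb(num_params)


if __name__ == "__main__":
    params = 1.5e9  # illustrative model size
    print(f"model state: {model_state_gb(params):.1f} GB")
    print(f"headroom on 32 GB: {headroom_gb(params, RTX_5090_GB):.1f} GB")
    print(f"headroom on 80 GB: {headroom_gb(params, H100_GB):.1f} GB")
```

A model whose state alone consumes most of the card leaves little room for activations, which is what forces the small batches and checkpointing described above.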

Most teams begin with fine-tuning and experimentation on 5090s. This is ideal for:

But pre-training is fundamentally different. You’re no longer adapting a model; you’re building one. And pre-training requires:

Once you enter this stage, H100 becomes the only realistic choice.

For many teams, the bottleneck becomes time: experiments take too long, iterations slow, and the entire product roadmap starts bending around compute speed. This is especially common in GenAI for video, simulation-driven VFX workflows, and applied research teams pushing new architectures.

H100 can reduce multi-day experiments to hours, not because it’s “fast,” but because its architecture sustains consistently high throughput across large batches and distributed setups. If iteration speed affects your ability to ship, then the H100 impacts the business as much as the model.

Video-language transformers, temporal attention layers, and multimodal embeddings are extremely demanding. They require:

Consumer GPUs handle inference and small-scale testing well, but full training quickly becomes unstable or too slow to be practical. If your product involves video intelligence, video generation, motion models, or multimodal embeddings, Hopper is usually the correct architecture far earlier than in text-only AI.

Once your default training strategy involves model sharding, tensor parallelism, or expert routing, the interconnect becomes the constraint.

Consumer GPUs were never designed for this type of workload. H100 clusters eliminate those bottlenecks and make distributed training predictable and scalable. If your architecture relies on GPUs working together, not independently, the H100 is the right choice.

In some markets (enterprise AI, medical AI, financial AI, broadcast AI), the infrastructure behind your model matters to stakeholders. H100 clusters send a signal of:

It’s not about showing off; it’s about matching technical ambition with appropriate compute.

There’s a moment where Hopper stops being the “premium GPU” and becomes the “smart GPU.” This happens when:

When your cost metric focuses on the training goal rather than GPU-hour pricing, H100 often becomes the cost-efficient GPU.

The H100 only delivers its full potential when deployed in the correct environment. 1Legion was built specifically for this. We run:

This means you can start fast on the 5090, scale responsibly, and transition to H100 the moment your architecture requires it, without friction, without lock-in, and with engineering guidance at each stage.

The H100 isn’t the starting point; it’s the scaling point. You choose the H100 when:

The 5090 remains exceptional for rapid prototyping, high-throughput GenAI, VFX, diffusion, and media workloads. But once your workload crosses into true large-scale AI, the H100 is not just helpful; it becomes the only tool built for what you’re trying to achieve.

And with 1Legion, you never need to choose prematurely. You scale into the H100 exactly when the workload demands it. Contact our engineers.